Scaling The Learning Cliff

When I started learning how to build recommender systems, my experience mirrored what people report in many domains: there were plenty of materials aimed at total beginners and plenty aimed at people who were already experts, but almost nothing in between.

It's relatively easy to find tutorials on training simple recommendation models (e.g. matrix factorization) and papers on building fancier ones (e.g. collaborative denoising auto-encoders). It's also not too hard to find books that will teach you about the variety, history, and evolution of approaches to recommendation.

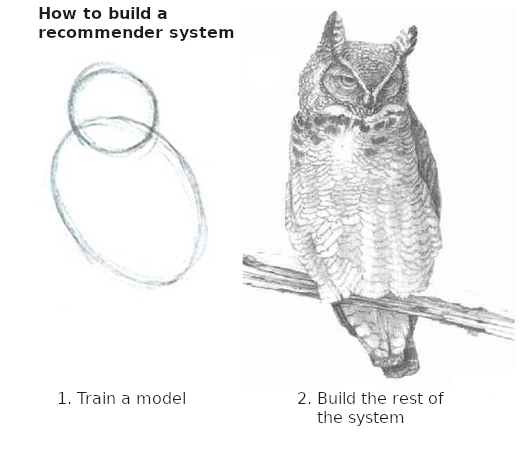

These are all great resources, but they don't entirely prepare you for building real-world recommender systems. In particular, they tend to put significant emphasis on modeling and leave out a lot of practical information about using models to build systems. Recommendations serve both user and business goals that transcend pure relevance prediction, so building recommender systems requires a wider set of skills than training and tuning models. A lower loss is not the same as a better recommendation product!

Real-World Recommenders

In order to understand real-world recommender systems, it's helpful to understand their typical structure. There are usually four parts, give or take:

- Candidate Selection: choosing a subset of items from the full catalog that are likely to be relevant

- Filtering: narrowing the pool of candidates by applying business logic to remove any obviously inappropriate items

- Scoring: estimating the likely value of each item to the user (which includes but is not limited to relevance)

- Ordering: selecting and arranging high-value items to create an appealing list or slate of recommendations
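The four stages compose naturally into one pipeline. Here is a minimal Python sketch of that structure; the `Pipeline` class, its field names, and the toy stages are hypothetical illustrations, not any particular library's API.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical aliases for illustration; real systems use richer types.
UserId = int
ItemId = int


@dataclass
class Pipeline:
    """Sketch of the four-stage structure: select -> filter -> score -> order."""
    select: Callable[[UserId], list[ItemId]]
    keep: Callable[[UserId, ItemId], bool]
    score: Callable[[UserId, Sequence[ItemId]], list[float]]
    order: Callable[[Sequence[ItemId], Sequence[float], int], list[ItemId]]

    def recommend(self, user: UserId, k: int) -> list[ItemId]:
        candidates = self.select(user)                               # Candidate Selection
        candidates = [i for i in candidates if self.keep(user, i)]   # Filtering
        scores = self.score(user, candidates)                        # Scoring
        return self.order(candidates, scores, k)                     # Ordering


def top_k_by_score(items, scores, k):
    """A simple ordering stage: highest score first, truncated to k."""
    ranked = sorted(zip(items, scores), key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in ranked[:k]]


# Toy stages: ten items, keep only even ids, score each item by its own id.
toy = Pipeline(
    select=lambda user: list(range(10)),
    keep=lambda user, item: item % 2 == 0,
    score=lambda user, items: [float(item) for item in items],
    order=top_k_by_score,
)
print(toy.recommend(user=0, k=3))  # [8, 6, 4]
```

Keeping each stage as a separate, swappable function is what lets the later stages grow beyond "straight from the model" without touching the others.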

A typical tutorial gloms them all together like this:

- Candidate Selection: all the items.

- Filtering: none.

- Scoring: straight from the model.

- Ordering: descending by score.
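For comparison, here is the tutorial version as a minimal sketch, assuming a trained matrix factorization model has already produced user and item factor matrices as NumPy arrays. The function name and the toy factors are made up. Note that scoring touches every row of `item_factors`, which is exactly the part that stops scaling as the catalog grows.

```python
import numpy as np


def tutorial_recommend(user_factors: np.ndarray, item_factors: np.ndarray,
                       user: int, k: int) -> np.ndarray:
    # Candidate Selection: every item in the catalog.
    # Filtering: none.
    # Scoring: raw dot product of the user's factors with each item's factors.
    scores = item_factors @ user_factors[user]
    # Ordering: descending by score, truncated to k.
    return np.argsort(-scores)[:k]


# Hypothetical toy factors: 2 users, 4 items, 2 latent dimensions.
user_factors = np.array([[1.0, 0.0],
                         [0.0, 1.0]])
item_factors = np.array([[0.9, 0.1],
                         [0.2, 0.8],
                         [0.5, 0.5],
                         [0.1, 0.3]])
print(tutorial_recommend(user_factors, item_factors, user=0, k=2))  # [0 2]
```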

But that's not particularly realistic. In the real world:

- We usually can't afford to score every item for every user, because the processing time is too long (both offline batch job duration and online serving latency). So we need ways to choose a subset of the full catalog that reliably includes the potentially relevant items.

- We want the option to focus on or exclude items in particular categories (e.g. genres, potentially offensive material, or items a user has already interacted with). We'll need some way to efficiently filter the retrieved candidates down to the ones that are worth scoring.

- Relevance isn't the only desirable quality in a set of recommendations, but it's often the only one we can train a model to recognize. Variety, novelty, and serendipity (among other qualities) also improve the user experience, so we'll want ways to adjust raw relevance scores to take them into account.

- We don't want to present a set of recommendations that are all the same, so ordering involves more than sorting by (even adjusted) relevance scores. We often want to present a list of options that functions more like a personalized restaurant menu than an omakase dining experience.
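Here is a minimal sketch of what each stage might look like once they are pulled apart. Every choice is an assumption swapped in for illustration: candidates come from hypothetical precomputed item-neighbor lists, filtering drops seen items and blocked categories, scoring adds a small novelty bonus for less popular items on top of the model's dot-product relevance, and ordering uses greedy Maximal Marginal Relevance (MMR), one well-known re-ranking technique for trading relevance against redundancy. All function names, data, and weights are hypothetical.

```python
import numpy as np

# All names, data, and weights below are hypothetical, chosen for illustration.


def select_candidates(history, neighbors, max_candidates=100):
    """Candidate Selection: pool precomputed neighbors of items in the user's history."""
    pool = []
    for item in history:
        for neighbor in neighbors.get(item, []):
            if neighbor not in pool:  # keep first occurrence, drop duplicates
                pool.append(neighbor)
    return pool[:max_candidates]


def filter_candidates(candidates, seen, blocked_categories, category_of):
    """Filtering: drop items the user has already seen or that fall in blocked categories."""
    return [i for i in candidates
            if i not in seen and category_of[i] not in blocked_categories]


def adjusted_scores(user_vec, item_factors, candidates, popularity, novelty_weight=0.1):
    """Scoring: model relevance (dot product) plus a small bonus for less popular items."""
    relevance = item_factors[candidates] @ user_vec
    return relevance + novelty_weight * (1.0 - popularity[candidates])


def mmr_order(candidates, scores, item_factors, k, trade_off=0.7):
    """Ordering: greedy Maximal Marginal Relevance over cosine similarity of item factors."""
    vecs = item_factors[candidates]
    vecs = vecs / np.linalg.norm(vecs, axis=1, keepdims=True)
    chosen, remaining = [], list(range(len(candidates)))
    while remaining and len(chosen) < k:
        def mmr(j):
            # Penalize similarity to the most similar item already on the slate.
            redundancy = max((float(vecs[j] @ vecs[c]) for c in chosen), default=0.0)
            return trade_off * scores[j] - (1.0 - trade_off) * redundancy
        best = max(remaining, key=mmr)
        chosen.append(best)
        remaining.remove(best)
    return [candidates[j] for j in chosen]


# Toy catalog: 6 items with 2 latent factors each.
item_factors = np.array([[1.0, 0.0], [0.9, 0.1], [0.8, 0.2],
                         [0.1, 0.9], [0.2, 0.8], [0.7, 0.7]])
popularity = np.array([0.9, 0.8, 0.7, 0.2, 0.5, 0.6])  # normalized to [0, 1]
category_of = ["drama", "drama", "drama", "comedy", "horror", "comedy"]
neighbors = {0: [1, 2, 5], 5: [3, 4, 0]}
user_vec = np.array([1.0, 0.5])

candidates = select_candidates(history=[0, 5], neighbors=neighbors)
candidates = filter_candidates(candidates, seen={0, 5},
                               blocked_categories={"horror"}, category_of=category_of)
scores = adjusted_scores(user_vec, item_factors, candidates, popularity)
print(mmr_order(candidates, scores, item_factors, k=2))  # [1, 3]
```

With `trade_off=0.7`, the second slot goes to item 3 rather than the higher-scoring but nearly identical item 2; setting `trade_off=1.0` falls back to pure descending-score order.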

What's Next

Let's start with a trained matrix factorization model and work forward from there, addressing the practical challenges that are likely to crop up when you try to build a recommender. I'll be using:

...and whatever other tools turn out to be useful along the way. (There are other resources out there for building ML data pipelines, feature stores, and model servers which are generally useful for recommendations too, so I will likely omit those parts in the interest of space and simplicity.)

Hopefully this will make it easier to get up the middle part of the learning curve, and save you some frustration as you go from model to system.
